07/24/2025

Q&A: How transcriptomics and AI are accelerating oncology R&D

In a recent webinar hosted by Tempus, industry leaders from healthcare and life sciences gathered to explore current and emerging applications of transcriptomic data in oncology.

Authors

Justin Guinney, PhD

SVP, Cancer Genomics, Tempus

Rafael Rosengarten, PhD

CEO & Co-Founder, Genialis

Sandip P. Patel, MD

Professor of Medical Oncology, UC San Diego

Justin Guinney, PhD, SVP of Cancer Genomics at Tempus, moderated the session and was joined by Rafael Rosengarten, PhD, CEO & Co-founder of Genialis, and Sandip P. Patel, MD, Professor of Medical Oncology, UC San Diego. The conversation centered on the exploration of transcriptomic data applications that are helping to change both oncology drug development and patient care.

RNA-seq’s utility has increased in oncology, offering a dynamic and functional understanding of cancer that extends beyond traditional genomic analyses. By measuring gene expression and identifying critical gene fusions, RNA-seq provides a more comprehensive view of tumor biology, classification, and progression. This capacity to reveal active biological processes and potential therapeutic vulnerabilities is contributing to advancements in precision cancer diagnostics and the acceleration of drug development.

Justin Guinney, PhD: Rafael, given Genialis’ focus on using RNA to predict patient response to therapy, what do you see as the most significant untapped potential going forward for large-scale RNA sequencing?

Rafael Rosengarten, PhD: The biggest untapped potential, which we’re seeing take off, is clinically relevant RNA profiling tests, likely to be interpreted by AI-driven algorithms. While RNA’s role in detecting structural variants can be therapeutically meaningful, and more information is generally good for understanding disease, in a regulated environment interpretability is paramount. The FDA wants interpretable answers, not just raw data. This is where AI’s advancements in algorithmic science become valuable. I’m especially excited about robust, predictive ensemble models of complex signatures that profile therapeutically vulnerable tumor biology. Just like with imaging-based AI devices, I anticipate a dramatic increase in FDA-cleared RNA-seq-based tests in the coming decade.

Justin Guinney, PhD: Sandip, from your perspective, when you think about the potential for RNA in driving research, what do you see as the biggest opportunity to impact clinical needs in oncology?

Sandip P. Patel, MD: RNA-seq is used for diagnosing certain actionable fusions, which may be exquisitely sensitive to targeted therapy; some targets, like NRG1, are predominantly diagnosed this way. For research, there are many potential applications. RNA-seq can inform antibody-drug conjugate efficacy, and it’s a very interesting area for immunotherapy, given the lack of good DNA or protein biomarkers; it helps understand immune composition in both tumor and stroma. As Rafael mentioned, we’re focused on the central dogma, and with cancer, you can’t leave any stone unturned. We need to consider DNA, RNA, and protein; the best approach depends on the use case. We’d be remiss not to explore all three aspects of tackling cancer.

The integration of transcriptomic data and AI-driven algorithms is helping pave the way for advancements in oncology research and development. To learn more about how Tempus is driving innovation and improving patient outcomes through precision medicine, click here or contact us.

Please note that the content in this document has been revised for clarity and conciseness. Some language and formatting may have been adjusted to enhance readability while preserving the original meaning and intent of the discussion.

Forward-Looking Statement

This press release contains forward-looking statements within the meaning of Section 27A of the Securities Act of 1933, as amended (the “Securities Act”), and Section 21E of the Securities Exchange Act of 1934, as amended, about Tempus and Tempus’ industry that involve substantial risks and uncertainties. All statements other than statements of historical facts contained in this press release are forward-looking statements, including, but not limited to, statements regarding the future applications of RNA sequencing; the potential for AI, including algorithms and foundation models, to refine treatment strategies and accelerate drug discovery; and the promise of new research methodologies that integrate real-world data with patient-derived models. In some cases, you can identify forward-looking statements because they contain words such as “anticipate,” “believe,” “contemplate,” “continue,” “could,” “estimate,” “expect,” “going to,” “intend,” “may,” “plan,” “potential,” “predict,” “project,” “should,” “target,” “will,” or “would” or the negative of these words or other similar terms or expressions. Tempus cautions you that the foregoing may not include all of the forward-looking statements made in this press release.

You should not rely on forward-looking statements as predictions of future events. Tempus has based the forward-looking statements contained in this press release primarily on its current expectations and projections about future events and trends that it believes may affect Tempus’ business, financial condition, results of operations, and prospects. These forward-looking statements are subject to risks and uncertainties related to: Tempus’ financial performance; the ability to attract and retain customers and partners; managing Tempus’ growth and future expenses; competition and new market entrants; compliance with new laws, regulations and executive actions, including any evolving regulations in the artificial intelligence space; the ability to maintain, protect and enhance Tempus’ intellectual property; the ability to attract and retain qualified team members and key personnel; the ability to repay or refinance outstanding debt, or to access additional financing; future acquisitions, divestitures or investments; the potential adverse impact of climate change, natural disasters, health epidemics, macroeconomic conditions, and war or other armed conflict, as well as risks, uncertainties, and other factors described in the section titled “Risk Factors” in Tempus’ Annual Report on Form 10-K for the year ended December 31, 2024, filed with the Securities and Exchange Commission (“SEC”) on February 24, 2025, as well as in other filings Tempus may make with the SEC in the future. In addition, any forward-looking statements contained in this press release are based on assumptions that Tempus believes to be reasonable as of this date. Tempus undertakes no obligation to update any forward-looking statements to reflect events or circumstances after the date of this press release or to reflect new information or the occurrence of unanticipated events, except as required by law.

-

12/11/2025

Driving enterprise value with RWD

Hear from biotech CEOs and VC leaders as they discuss how real-world data can inform strategic decision-making in biotech companies.

Watch replay -

11/11/2025

A new era of biopharma R&D: The TechBio revolution—realities and the next frontier

Join Tempus and Recursion leaders to explore their strategic TechBio partnership. Learn how they use AI and supercomputing with petabytes of data to accelerate drug discovery and development. See the impact on biopharma R&D's future.

Watch replay -

11/14/2025

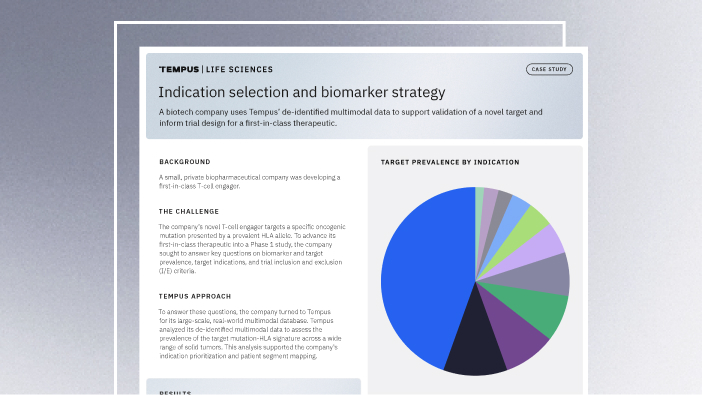

Validating a novel target and informing trial design for a first-in-class therapeutic

Discover how a biopharma company used Tempus’ de-identified multimodal data to support validation of a novel target and inform trial design for a first-in-class therapeutic.

Read more