
08/23/2022

Navigating biomarker validation

Attaining biomarker validation is not a one-size-fits-all process. Find out how to optimize a biomarker to increase its chance of widespread adoption.

Authors

Jonathan Dry

Vice President of Scientific Discovery, Tempus


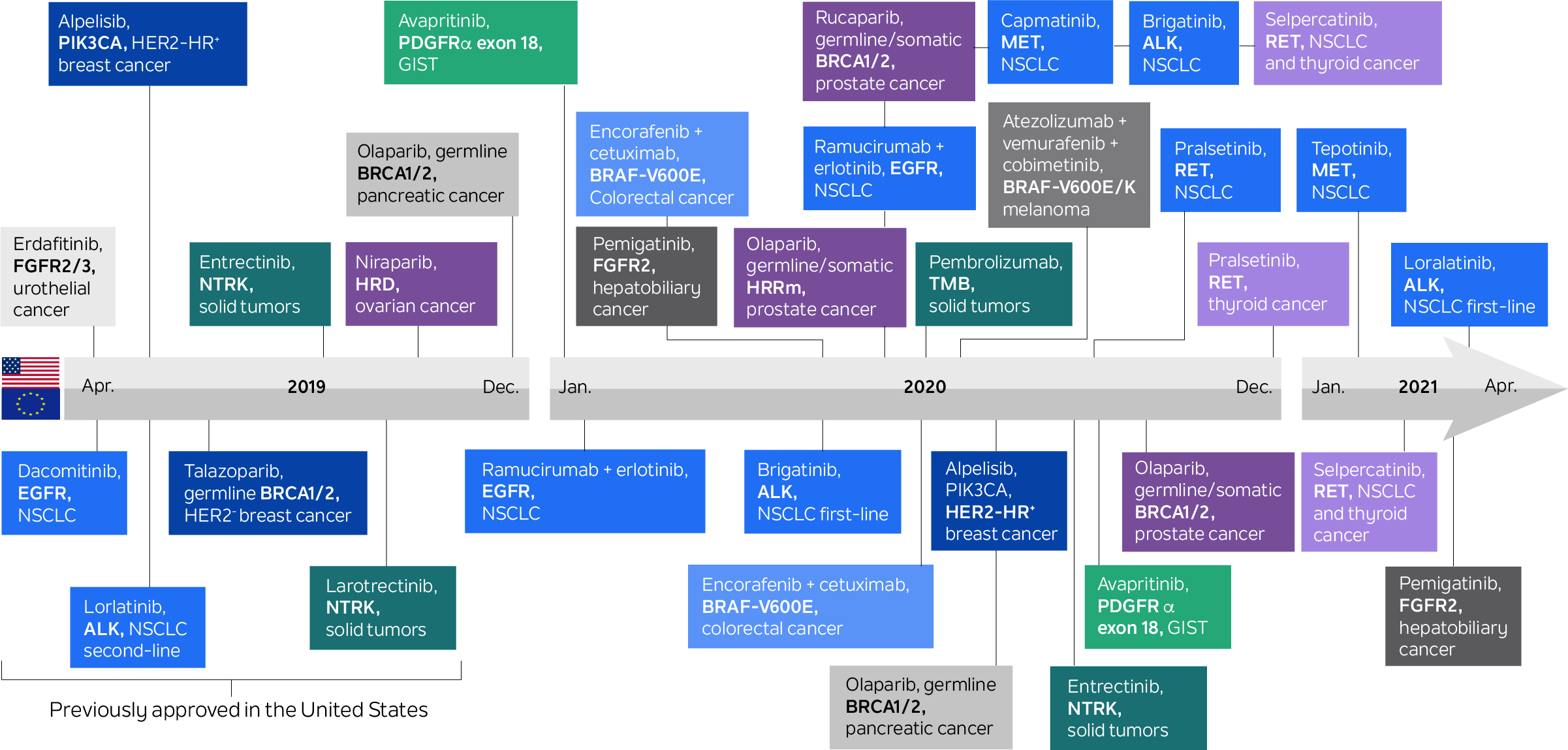

In the last two decades, multiple targeted therapies have become available to cancer patients based on biomarker-determined eligibility. Biomarker-guided therapeutic choice has played a significant role in oncology for much longer than that: for example, hormone receptor status has been used to direct hormonal therapy in breast cancers for over four decades. With the advent of genomic testing, this paradigm has expanded dramatically. Today, there are more than 35 drugs on the U.S. market for genetically determined oncology indications.

Figure 1. Medical products in the U.S. and the European Union for genetically determined oncology indications

Source: Mateo, J., Steuten, L., Aftimos, P. et al. Delivering precision oncology to patients with cancer. Nat Med 28, 658–665 (2022). https://doi.org/10.1038/s41591-022-01717-2

The emergence of cancer immunotherapies represents a new level of complexity for the patient selection biomarker paradigm. Environmental exposures, heritable traits, comorbidities, concomitant therapies, neoantigen load, immune checkpoint expression, cellular interactions, the tumor microenvironment’s physical characteristics, and the microbiome have all been shown to influence the immune system’s role in tumorigenesis and response to immunotherapies.

Therefore, to adequately determine optimal immunotherapy, it may be necessary to capture a sufficiently full picture of this complex biology, requiring researchers or providers to integrate heterogeneous data sources alongside genomics. Such a multimodal biomarker approach may also be required to select optimal combinations of therapies, aiming to tackle the full spectrum of tumor and immune hallmarks and clonal heterogeneity driving a patient’s disease or comorbidities.

Biomarkers and their applications come in many shapes and sizes, and can include:

- Tools used in research and tools determining clinical decisions

- Direct measures of drug targets and indirect determinants of dependency

- Single measurements of discrete assays as well as complex algorithms over multimodal ‘omic platforms

- Screens for disease risk and early-stage disease detection

- Predictions of optimal therapies and surrogate measures of drug response

- Monitoring for disease progression or minimal residual disease as well as diagnosis of drug resistance

- Dynamic measures of drug engagement and indicators of drug efficacy

- Assessment of patient wellness and determinants of adverse event risk

- Disease-specific targets as well as biomarkers transcending classical histological definitions

- Noninvasive, real-time biometrics as well as tests requiring invasive tissue collection

- Ex vivo or in silico avatar simulations of outcomes to therapy

In this article, we focus on designing validation workflows that increase the probability of success for predictive biomarkers.

When contemplating the appropriate path to validate a biomarker of interest, it is important to have clear expectations on how the biomarker will be applied, by whom, and for what purpose. Several decisions can subsequently be made in the design of development and validation processes to maximize the probability of success for the intended application.

Here, we will discuss how the intended use of a biomarker may influence choices in the process of its validation, including:

- Biomarker purpose

- Hardware vs. software

- Performance metrics

- Cohort design and generalizability

- Ease of use

- Operational considerations

- Reimbursement

Biomarker purpose: It is important to understand the goal for a biomarker, plus any risk associated with the test, when assessing the appropriate route for validation:

- For research use only

- As an exploratory clinical endpoint

- To determine therapeutic eligibility

Understanding the biomarker’s goal, along with its complexity and past precedent, will determine the type and level of regulation required for the respective test and resulting necessary steps in validation.

Hardware vs. software: It can be helpful to separate the hardware or physical platform with which measurements are taken from the software or algorithm with which recommendations are determined from resulting measurements. In many cases, a biomarker may rely on measurements taken from hardware that would be independently validated. In these cases, new validation plans need only focus on the novel algorithm.

Performance metrics: Performance assessment includes evaluation of the test’s technical reproducibility and its analytical precision, sensitivity, specificity, and selectivity. It is essential to consider the intended application when choosing the statistical endpoint against which a biomarker is optimized and validated.

For example, a biomarker used to screen patients for triage to a more rigorous test may aim to identify every patient who could benefit, and so be less tolerant of false negatives than false positives. The biomarker would need to be validated to perform with high sensitivity (also called recall) or a high recall-weighted F-score such as the F3 statistic. However, a biomarker used to determine therapeutic administration, particularly where that will involve a choice between alternative therapies, may aim to ensure that all patients identified with the biomarker are likely to benefit and so be intolerant of false positives. In this case, the biomarker needs to be validated to perform with high specificity or precision. For other purposes, overall performance may be most appropriate, in which case validation may focus on accuracy, area under the receiver operating characteristic curve (AUC), or the F1 statistic.
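As a minimal sketch of how these endpoints relate, the following Python computes each statistic from the four cells of a confusion matrix. The function names and the example counts are hypothetical and chosen only for illustration; they are not drawn from any Tempus dataset or tool.

```python
def confusion_metrics(tp, fp, fn, tn):
    """Return common performance metrics from confusion-matrix counts.

    Assumes every denominator is non-zero (at least one true positive case,
    one true negative case, and one positive call).
    """
    return {
        "sensitivity": tp / (tp + fn),   # also called recall
        "specificity": tn / (tn + fp),
        "precision": tp / (tp + fp),     # positive predictive value
        "accuracy": (tp + tn) / (tp + fp + fn + tn),
    }


def f_beta(precision, recall, beta):
    """F-beta score: beta > 1 weights recall more heavily, beta < 1 weights precision."""
    b2 = beta * beta
    return (1 + b2) * precision * recall / (b2 * precision + recall)


# Hypothetical screening result: few missed patients, many false alarms.
metrics = confusion_metrics(tp=90, fp=40, fn=10, tn=860)
f1 = f_beta(metrics["precision"], metrics["sensitivity"], beta=1)
f3 = f_beta(metrics["precision"], metrics["sensitivity"], beta=3)
print(metrics, f1, f3)
```

In this hypothetical case the F3 score is higher than the F1 score because recall exceeds precision, which illustrates why a screening biomarker and a treatment-selection biomarker with the same confusion matrix can look quite different depending on the endpoint chosen.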

Alongside the intended application, it is also essential to consider any error in the truth measure against which performance is evaluated and the effect that may have on the sensitivity or specificity that can be expected. The stability of the assay to operational variation, as well as instrumentation performance over time and between sites, must also be established.
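To illustrate the effect of an imperfect truth measure, the sketch below estimates the sensitivity and specificity a biomarker would appear to have when scored against a reference that itself makes errors. It rests on a simplifying assumption that is not stated in the article: the biomarker's errors and the reference's errors are independent given the patient's true status. All names and numbers are hypothetical.

```python
def apparent_performance(prevalence, test_se, test_sp, ref_se, ref_sp):
    """Apparent sensitivity/specificity of a test measured against an imperfect reference.

    Assumption (for illustration only): test and reference errors are
    conditionally independent given the true disease status.
    """
    p = prevalence
    # Joint probabilities of (test call, reference call).
    both_pos = p * test_se * ref_se + (1 - p) * (1 - test_sp) * (1 - ref_sp)
    both_neg = p * (1 - test_se) * (1 - ref_se) + (1 - p) * test_sp * ref_sp
    # Marginal probabilities of the reference call.
    ref_pos = p * ref_se + (1 - p) * (1 - ref_sp)
    ref_neg = p * (1 - ref_se) + (1 - p) * ref_sp
    return both_pos / ref_pos, both_neg / ref_neg


# A hypothetically perfect biomarker scored against a 90%/90% reference
# at 20% prevalence appears far from perfect.
app_se, app_sp = apparent_performance(0.2, 1.0, 1.0, 0.9, 0.9)
print(round(app_se, 4), round(app_sp, 4))
```

Under these assumptions, even a flawless biomarker cannot exceed the ceiling set by the reference, so a validation plan may need to account for reference error before fixing a target sensitivity or specificity.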

Cohort design: Training/testing cohorts and clinical trials can often over- or underrepresent certain populations. For example:

- Inclusion/exclusion criteria may be introduced to reduce confounding factors and ensure validation criteria are cleanly tested

- There may be temporal drift in performance if training data was collected during a fixed period of time

- Operational choices, such as choice of research center and locations for studies, may bias towards specific practice patterns, populations of particular ancestry, or socioeconomic status

These restrictions may introduce a context dependency to the validation result and subsequently reduce the population in which the biomarker can be assumed performant. It is essential, therefore, to consider additional assessments of a biomarker’s generalizability to the full diversity of the real-world population for which it is intended.
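One simple way to probe generalizability is to report performance separately for each subgroup of interest rather than only in aggregate. The sketch below assumes binary truth labels and binary biomarker calls; the record layout, the function name, and the site labels are hypothetical.

```python
from collections import defaultdict


def stratified_performance(records):
    """Per-subgroup sensitivity and specificity.

    records: iterable of (subgroup, truth, call), with truth and call in {0, 1}.
    A metric is None when the subgroup has no cases to compute it from.
    """
    counts = defaultdict(lambda: {"tp": 0, "fp": 0, "fn": 0, "tn": 0})
    for subgroup, truth, call in records:
        if truth == 1:
            key = "tp" if call == 1 else "fn"
        else:
            key = "fp" if call == 1 else "tn"
        counts[subgroup][key] += 1

    summary = {}
    for subgroup, c in counts.items():
        positives = c["tp"] + c["fn"]
        negatives = c["tn"] + c["fp"]
        summary[subgroup] = {
            "n": positives + negatives,
            "sensitivity": c["tp"] / positives if positives else None,
            "specificity": c["tn"] / negatives if negatives else None,
        }
    return summary


# Hypothetical validation records from two collection sites.
records = (
    [("site_A", 1, 1)] * 3 + [("site_A", 1, 0)] + [("site_A", 0, 0)] * 4
    + [("site_B", 1, 1)] * 2 + [("site_B", 1, 0)] * 2
    + [("site_B", 0, 0)] * 3 + [("site_B", 0, 1)]
)
print(stratified_performance(records))
```

A gap between subgroups, as in this made-up example, would not by itself prove a lack of generalizability, since small strata give noisy estimates, but it flags where additional cohorts or confidence intervals may be warranted.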

Ease of use: A result’s actionability (i.e., the clarity with which it supports a key decision by the respective healthcare professional) is essential to consider. Key considerations include:

- The level of performance required for the biomarker-driven choice to be considered better than the alternative

- The threshold at which a result will be converted into a decision to change treatment or determine reimbursement

For example, in an orphan designation with no alternative current therapeutic choice, a lower-performing model may be acceptable for decision making. On the other hand, a biomarker to determine choice between therapies will need to perform at a much higher level. Furthermore, a biomarker determining avoidance of therapy, particularly in an indication where no alternative effective therapy may be available, may need even greater precision to influence treatment decisions.

Explainability (i.e., the ease of interpretation) can also be critical to adoption of a biomarker. A test result providing a binary yes/no directing an objective positive treatment decision may be more easily adopted than a continuous score, where interpretation can be subjective or context dependent. In addition, experts in the field may be more accepting of less extensive validation if the measurement in question has simple and clear biological rationale for the treatment choice. Complex, multivariate algorithms or black-box models may require more robust validation evidence and a higher level of customer advocacy and education to drive adoption.
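When a continuous score must be converted into a binary yes/no result, the cutoff can be chosen against the performance requirement discussed above. The following sketch, under the assumption that higher scores indicate a positive result, picks the lowest observed cutoff that meets a minimum specificity on a validation set. The function name and data are hypothetical, and this is only one of many possible threshold-selection rules.

```python
def threshold_for_specificity(scores, labels, min_specificity):
    """Lowest observed cutoff (score >= cutoff is called positive) that
    reaches the target specificity.

    Returns (cutoff, sensitivity, specificity), or None if no observed
    score works as a cutoff. Assumes both classes are present in labels.
    """
    positives = [s for s, y in zip(scores, labels) if y == 1]
    negatives = [s for s, y in zip(scores, labels) if y == 0]
    # Specificity can only rise as the cutoff rises, so the first cutoff
    # that meets the target also keeps sensitivity as high as possible.
    for cutoff in sorted(set(scores)):
        specificity = sum(1 for s in negatives if s < cutoff) / len(negatives)
        if specificity >= min_specificity:
            sensitivity = sum(1 for s in positives if s >= cutoff) / len(positives)
            return cutoff, sensitivity, specificity
    return None


# Hypothetical validation scores and truth labels.
scores = [0.1, 0.2, 0.35, 0.4, 0.6, 0.7, 0.8, 0.9]
labels = [0, 0, 0, 1, 0, 1, 1, 1]
print(threshold_for_specificity(scores, labels, 0.75))
print(threshold_for_specificity(scores, labels, 1.0))
```

Tightening the specificity requirement in this example raises the cutoff and lowers sensitivity, which is the trade-off a fixed decision threshold locks in for every downstream user of the test.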

Operational considerations: In addition to the performance and actionability of the biomarker, many factors that influence its approval and uptake into real-world practice can be considered in up-front design and validation to ensure the resulting data best supports adoption. Foremost among these factors are the test’s cost and practicality. For example:

- A biomarker that can be derived from measurements already taken in routine practice has the potential to be readily and inexpensively implemented, reducing screening failures due to an inability to perform new tests. For instance, by using standard clinical/demographic/epidemiological data, labs or medical imaging, or even data from a comprehensive genomic platform at a point in care for which it is already reimbursed or recommended in clinical guidelines, one might avoid the need for a new measurement to be taken in order to test the biomarker of choice. However, such an approach would need to consider the variations of data collection standards, assays, and readers or data processing pipelines run in different labs. Validation may need to include extensive concordance studies.

- Similarly, a test with a rapid turnaround time for results will cause less delay and disruption to alternative clinical decisions, and will be more likely to be adopted within a clinical workflow as a result.

- For a new assay, adoption will be facilitated if biomaterial already collected in standard clinical practice can be used. However, one then needs to consider how validation results will justify the choice of this assay over others competing for use of the same biomaterial.

- Concordance will be improved if a kittable assay can be sent to the clinical site, or if the biosample can be shipped for profiling at a single controlled site. However, one then needs to consider the additional labor required for implementation.

- For a test requiring new biomaterial, it may be pertinent to explore validation in biosamples that can be less invasively collected as part of standard clinical practice. Examples include switching from a tissue-based test to a blood-based test, from fresh tissue to fixed tissue, or from an interventional time point to a time of routine biosample collection (e.g., at diagnosis).
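The concordance studies mentioned above often summarize agreement between two labs, assays, or readers with a chance-corrected statistic. As one hedged illustration, the sketch below computes Cohen's kappa for paired binary calls; the choice of kappa, the function name, and the example calls are assumptions made for this example rather than a method prescribed by the article.

```python
def cohens_kappa(calls_a, calls_b):
    """Cohen's kappa for two sets of paired binary calls (0 or 1)."""
    if len(calls_a) != len(calls_b) or not calls_a:
        raise ValueError("calls must be non-empty and of equal length")
    n = len(calls_a)
    # Observed agreement: fraction of samples given the same call.
    observed = sum(1 for a, b in zip(calls_a, calls_b) if a == b) / n
    # Agreement expected by chance from each source's positive rate.
    rate_a = sum(calls_a) / n
    rate_b = sum(calls_b) / n
    expected = rate_a * rate_b + (1 - rate_a) * (1 - rate_b)
    if expected == 1:
        raise ValueError("kappa is undefined when both sources make a single constant call")
    return (observed - expected) / (1 - expected)


# Hypothetical biomarker calls on the same ten samples from two labs.
lab_a = [1, 1, 0, 0, 1, 0, 1, 0, 0, 1]
lab_b = [1, 1, 0, 0, 1, 0, 0, 0, 1, 1]
print(cohens_kappa(lab_a, lab_b))
```

Here the labs agree on 8 of 10 samples, but because half of that agreement would be expected by chance, kappa is noticeably lower than the raw agreement rate.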

Reimbursement: It is critical to clearly understand who will pay for the biomarker and what validation, clinical utility, and cost effectiveness data are required to justify payment. This can be complex, particularly if there is intent for equal access to the biomarker across multiple locations where payers and guidelines vary. It may also be pertinent to consider first seeking reimbursement in a discrete context that may pave the route to adoption from broader and commercial entities.

Throughout the validation process, choices can be made to reduce the ultimate cost of the biomarker to the payer. In addition, one can consider the value returned to the payer through the biomarker’s use. For example, a biomarker offering a more precise diagnosis that focuses treatment choice, or a broad panel enabling multiple biomarkers to be read from a single assay output, may ultimately save the payer from reimbursing multiple futile alternative tests, treatments, and procedures.

Summary: Biomarkers come in multiple shapes and sizes, and no one path to validation suits them all. Decisions among hardware, software, performance metrics, cohort design, generalizability, ease of use, operational considerations, and other characteristics can be tailored to suit the specifics of the measure being taken and the context in which a test will be applied. The optimization of a predictive biomarker, and the data gathered for its validation, can increase the chance that a biomarker test will be adopted and/or reimbursed for the intended purpose.

Depending on your commercialization goals, you may seek FDA authorization of the biomarker; there are many paths to consider, including a cleared or approved companion diagnostic and approval/clearance of a stand-alone biomarker test. Contact Tempus to discuss these considerations with one of our regulatory experts.

Learn more

Learn more

Applications for multimodal real-world data

De-risking clinical trials and unlocking new knowledge about diseases

DOWNLOAD GUIDEStay informed

Be notified whenever Tempus publishes new and relevant research, webinars, and other resources.

Sign up-

07/01/2025

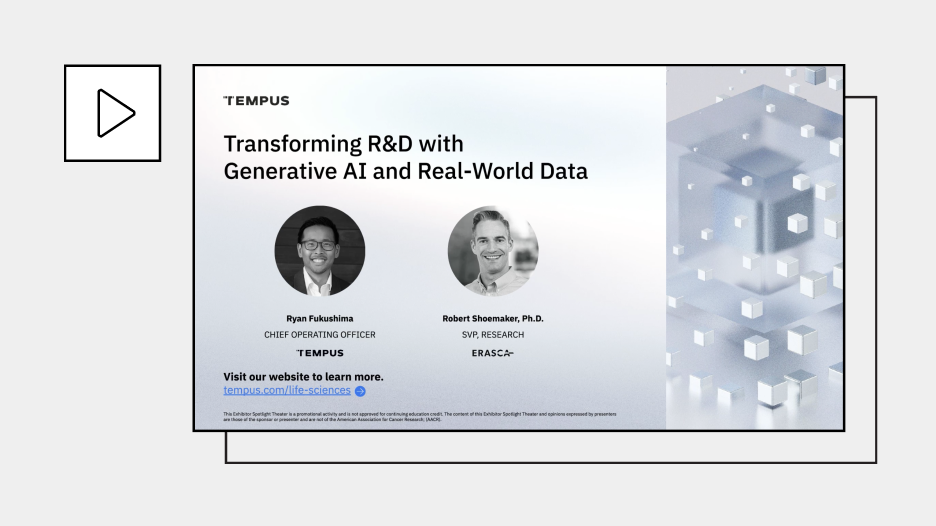

Transforming R&D with Generative AI and Real-World Data

Mike Yasiejko, General Manager and Executive Vice President at Tempus, recently spoke with Andrew Mazar, PhD, Chief Operating Officer at Actuate Therapeutics, about the ways Tempus’ liquid biopsy (xF+) and methylation assays have been valuable to Actuate’s work and how the company has benefitted from the partnership

Watch now -

06/12/2025

AI & ML in action: Demonstrating real-world impact in trial design & patient care

Discover how the Tempus platform leverages AI and ML to inform standard of care practices through health equity guidelines and drive insights that help refine clinical trial design. Engage with live demonstrations showcasing how our tools identify patients by modifying inclusion/exclusion criteria and leveraging patient queries. Explore how our tools integrate NCCN guidelines and empower life science teams to access current, actionable patient-journey insights. Learn how these real-world applications can drive progress in your clinical development initiatives.

Watch replay

Secure your recording now. -

06/09/2025

Bridging the translational gap: The role of organoids in oncology R&D

This white paper explores the evolving role of organoids in oncology R&D, highlighting their potential as predictive preclinical models and their ability to reduce translational risk. Download for a comprehensive overview of the scientific landscape, key adoption barriers, emerging innovations, and how pharma companies leverage organoids to accelerate precision medicine.

Read more